Hello👋, here is a person who stumbled into the field of AI by accident and still hasn’t left. He graduated from the Gaoling School of Artificial Intelligence at Renmin University of China with a bachelor’s degree.

His research interests lie primarily in Natural Language Processing (NLP), with a focus on test-time scaling of large language models (LLMs) through both framework design and post-training.

He is still seeking PhD opportunities. It’s a long journey, but he never lacks the perseverance to grow through adversity.

🔥 News

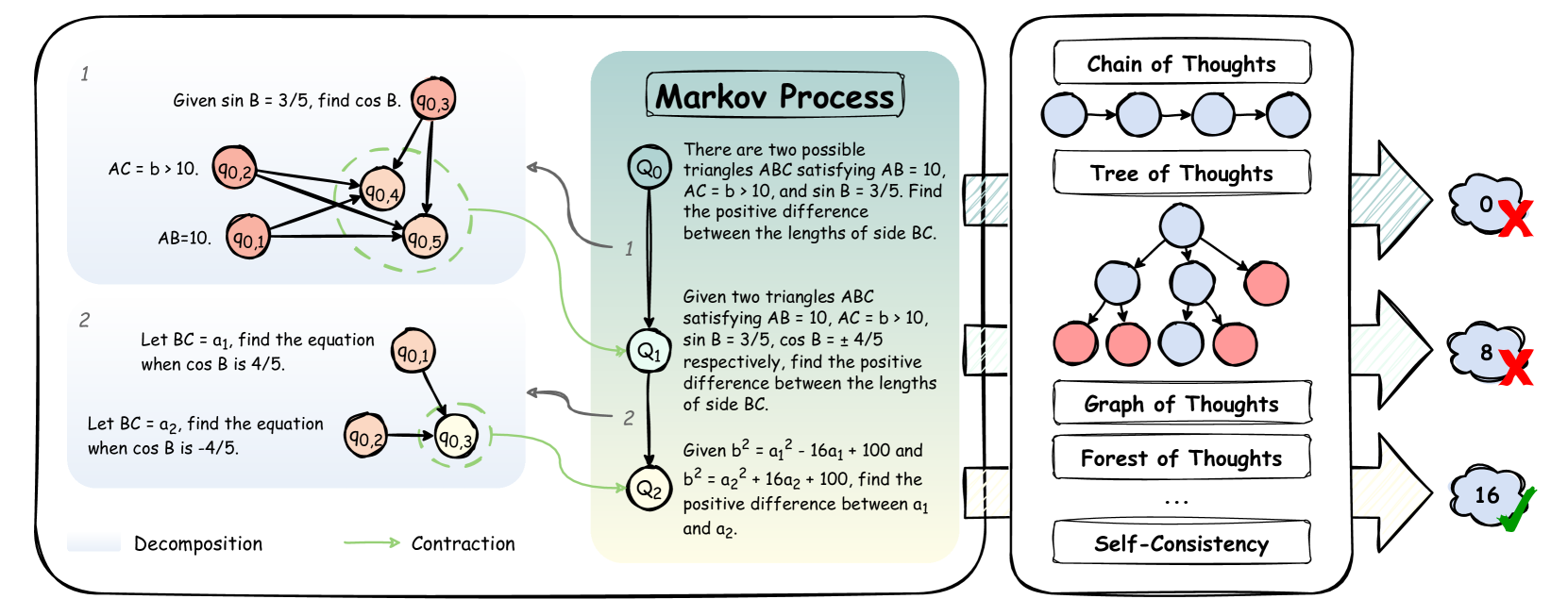

- 2025.09.19: 🥳🥳 AoT is accepted by NeurIPS 2025!

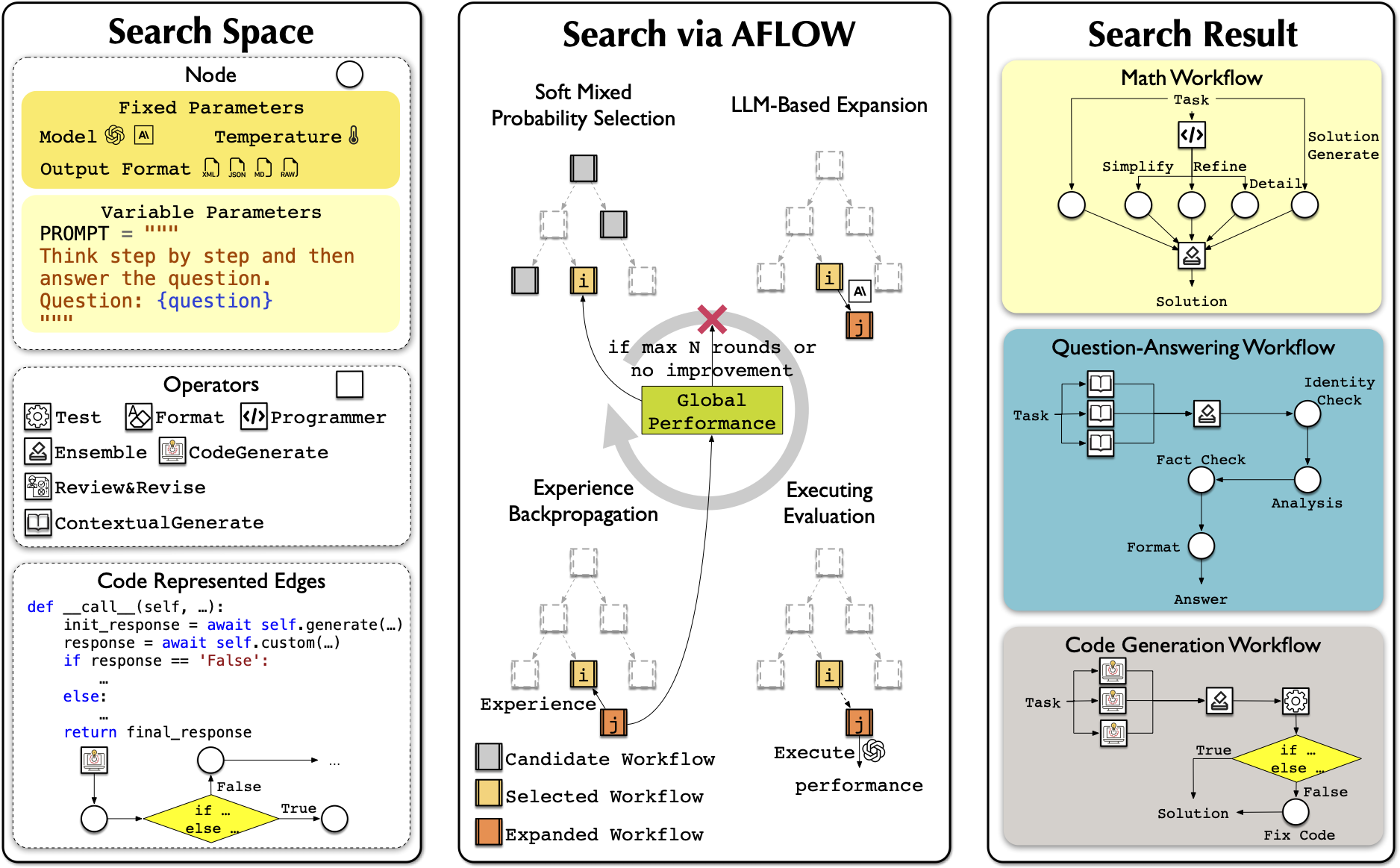

- 2025.02.11: 🥳🥳 AFlow is accepted by ICLR 2025 as an Oral!

📝 Selected Publications

🎖 Honors and Awards

- 2024.06 Alibaba Global Mathematics Competition AI Challenge - Third Place Award🥉 (3rd out of 563 teams) ($2000)


- 2023.12 Baidu & FounderPark AGI Hackathon - Second Place Award🥈 (¥10000)


📖 Education

- 2020.09 - 2024.06 B.Eng. in Artificial Intelligence, Gaoling School of Artificial Intelligence, Renmin University of China, Beijing, China

  - Graduation thesis recommendation

💬 Invited Talks

Atom of Thoughts for Markov LLM Test-Time Scaling

- 2025.06 Invited Talk at BAAI 2025, Beijing Academy of Artificial Intelligence

- 2025.04 Invited Talk at HKUST(GZ) LLM Seminar, hosted by Prof. Zhijiang Guo

- 2025.03 Invited Talk at HKUST NLP Group, hosted by Prof. Junxian He

- 2025.03 Invited Talk at Monash Medical AI Group (MMAI), hosted by Dr. Lie Ju

📅 Internships

- 2023.09 - 2024.01 Kwai Technology

  - Research Focus: LLM-based Agents; Advanced Data Analysis

- 2023.05 - 2023.07 Deep Space Symphony

  - Research Focus: Music-Driven Motion Diffusion; Controllable Generation
